India Tightens Oversight of AI Diagnostic Software, Makes Regulatory Licences Mandatory

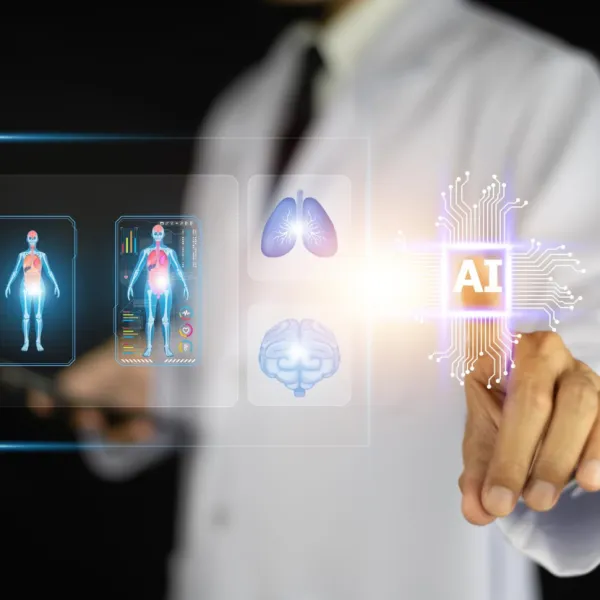

India has strengthened its regulatory grip on artificial intelligence in healthcare by bringing AI diagnostic software under the medical device framework, making regulatory licences mandatory before such software can be deployed in clinical settings.

The Central Drugs Standard Control Organisation (CDSCO) has issued a directive requiring developers to obtain manufacturing or import licences, marking a significant shift in how AI health technologies are governed.

The decision addresses a long-standing regulatory gap in India’s digital health ecosystem. Until now, many AI-based diagnostic solutions have operated in a grey zone, often labelled as research tools or wellness products despite being used for clinical decision-making.

With the rapid expansion of AI in medical imaging, screening, and disease detection, regulators have been under increasing pressure to introduce clearer oversight mechanisms aligned with patient safety standards.

Under the new framework, AI software used to detect or diagnose medical conditions has been classified as a Class C medical device, a category indicating moderate to high risk to patient health.

This includes AI systems that analyse X-rays, CT scans, MRIs, and other imaging data to identify conditions such as cancer, cardiovascular disease, and neurological disorders. As Class C devices, these AI tools are now subject to rigorous pre-market approval and post-market monitoring requirements.

“The reclassification brings AI health software into the same regulatory policing as traditional medical devices such as CT and MRI machines,” officials said.

As part of compliance, AI developers must demonstrate clinical safety and performance, including validation studies conducted on Indian patient populations to ensure accuracy in real-world healthcare settings. This requirement reflects concerns that AI models trained on foreign datasets may not perform consistently across diverse demographics.

In addition to licensing, health-tech companies must establish robust quality management systems and follow strict post-deployment surveillance protocols. These protocols include mandatory reporting of adverse events, such as misdiagnoses or delayed diagnoses caused by algorithmic errors.

Regulators expect these measures to create clearer accountability across the AI development lifecycle, from design and training to real-world clinical use.
